On 10 May 2016, thought leaders involved in the IMI GetReal initiative met in Bern, Switzerland for the webinar “Synthesis and integration of real-world evidence in network meta-analyses and outcome prediction”. The webinar gave participants an overview of key methodological deliverables achieved by the IMI GetReal team. More than 200 listeners tuned in to the presentations and took part in the Q&A discussion that followed.

Part of the IMI GetReal project has been devoted to establishing a coherent framework for assessing and bridging the efficacy-effectiveness gap between treatment outcomes in clinical trial and real-world populations. The focus of this webinar was on the use of network meta-analysis for evidence synthesis and on the extension of its conclusions in real-world settings, using predictive modelling.

Starting off the discussion was Matthias Egger, Director of the Institute of Social and Preventive Medicine (ISPM), University of Berne, Switzerland. “The GetReal consortium is really about developing an understanding amongst health care decision makers on how real world evidence could be used in drug development,” he noted. “Today we are going to talk primarily about how evidence synthesis and modelling can be used to bridge the efficacy-effectiveness gap.” He outlined some key questions towards this end:

- How well can relative effectiveness be estimated from phase II and III RCT efficacy studies alone?

- How should RCTs, additional relative effectiveness studies and observational data best be integrated to address specific decision making needs of regulatory and HTA bodies at launch?

- How can relative effectiveness be predicted from available efficacy and observational data?

“These are the high-level issues we are grappling with in work package 4,” Egger concluded. He then highlighted how the investigative questions become progressively more detailed: from “how efficacious and safe is this drug” to “how effective and safe is this drug compared to alternative therapies, in the patients who will likely receive it post-launch?” and, finally, “How effective and safe is this drug compared to alternative therapies, in the patients who will likely receive it in the real world of a health care?”

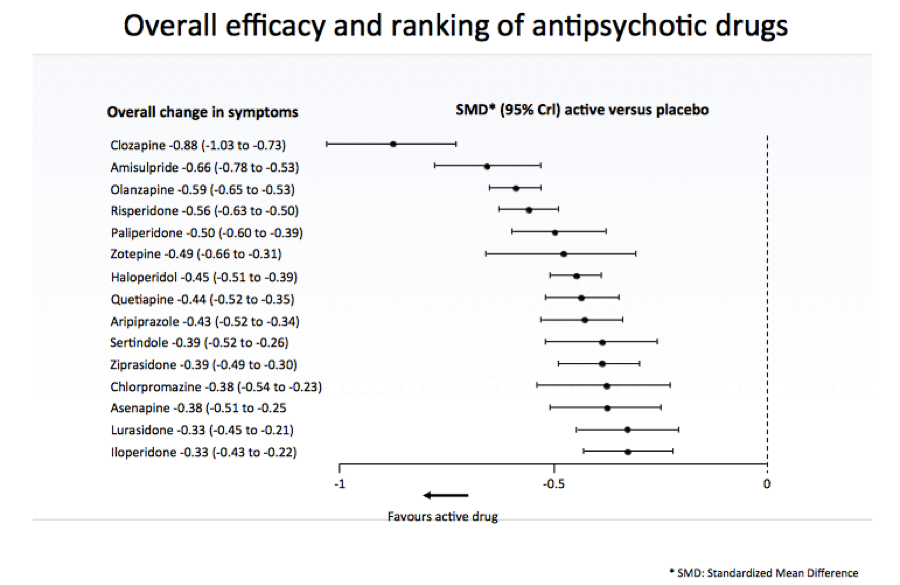

The next speaker, Georgia Salanti, Associate Professor, University of Ioannina School of Medicine, spoke on estimating and appraising treatment effects using randomised and real-world evidence. Pointing to a comparison of 15 antipsychotic drugs in schizophrenia as an example, Salanti described for participants how network meta-analysis works. “We want to put together all these randomised studies that compare different pairs of treatments and obtain a hierarchy of the interventions according to their effects,” she said, noting: “At the heart of network meta-analysis is indirect comparison.”
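To make the idea of indirect comparison concrete, here is a minimal sketch of the simplest case: two treatments, B and C, have each been compared with a common comparator A (for example placebo) but never with each other. The function name and all numbers are hypothetical and are not taken from the antipsychotics case study; a full network meta-analysis combines many such comparisons in a single model.

```python
import math


def indirect_comparison(d_ab, se_ab, d_ac, se_ac):
    """Estimate the effect of C versus B through the common comparator A.

    d_ab, d_ac: effects of B and C relative to A (e.g. standardised mean differences)
    se_ab, se_ac: their standard errors
    Assumes the two sets of trials are independent and similar enough in
    effect modifiers for the comparison to be valid (transitivity).
    """
    d_bc = d_ac - d_ab                       # indirect estimate of C vs B
    se_bc = math.sqrt(se_ab**2 + se_ac**2)   # variances add for independent estimates
    ci = (d_bc - 1.96 * se_bc, d_bc + 1.96 * se_bc)  # approximate 95% interval
    return d_bc, se_bc, ci


# Hypothetical standardised mean differences versus placebo (A)
d_bc, se_bc, ci = indirect_comparison(d_ab=-0.50, se_ab=0.08, d_ac=-0.30, se_ac=0.06)
print(f"C vs B: {d_bc:.2f} (SE {se_bc:.2f}), 95% CI {ci[0]:.2f} to {ci[1]:.2f}")
```

Note that the indirect estimate is less precise than either of the direct estimates it is built from, because the two variances are summed.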

“What I want to emphasise is that network meta-analysis has the potential to estimate relative treatment effects for all pairs of interventions,” said Salanti, “even for those for which no randomised trial exists.” The results of the network meta-analysis, the standardised mean differences of all active drugs versus placebo, were presented in rank order of efficacy (for an up-to-date technical review of the methods used in this case, see GetReal in network meta-analysis: a review of the methodology. Efthimiou O et al. Res Synth Methods. 2016).

Evidence from a large observational study on five drugs was also available, noted Salanti. The assumption of transitivity might be difficult to defend when both RWE and RCTs are included, she admitted, elaborating that studies differ in inclusion criteria, methods and more, and that there might be discrepancies between direct and indirect evidence (statistical inconsistency) as well as between RWE and RCTs.

On the question of selecting evidence versus taking an all-inclusive approach, “If differences are found, we try to explain them,” noted Salanti. This means checking for effect modifiers and for differences in the included populations and settings. More detail on these methods can be found in the review of individual participant data (IPD) meta-analysis methodology by Debray TP et al. (Res Synth Methods 2015). In case of residual disagreement, she concluded, it is better not to discard RWE but to include it and explore the impact of attaching various degrees of credibility to it.

While RCTs are generally thought to have higher credibility, RWE tends to have higher relevance. Researchers are reluctant to combine the two for fear of compromising the credibility or relevance of one or the other, noted Salanti. However, different assumptions about the credibility of RWE can be accommodated through a design-adjusted analysis, informative priors derived from RWE, or a three-level hierarchical model. Salanti elaborated on these possibilities using the case study of antipsychotic drugs.

The take-home message, Salanti concluded, is that “If you are concerned about residual differences between RCTs and RWE, or if you think that RWE is less trustworthy than RCTs, decrease the influence of the RWE in your estimates by dividing the variance.” It is difficult to predict the magnitude or direction of possible biases introduced by including RWE in an NMA, she acknowledged, advising researchers to explore, in a sensitivity analysis, the effect of placing different levels of confidence in the observational evidence before drawing final conclusions. Further recommendations were to evaluate the risk of bias in the results after considering the relative contribution of each source of evidence to the pooled estimates, and to extend NMA with mathematical modelling to make predictions in a real-world setting.
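One way to read the advice on “dividing the variance” is as follows: the variance of the RWE estimate is divided by a confidence weight w between 0 and 1, which inflates it and so reduces the weight of the RWE in an inverse-variance pooled estimate. The sketch below assumes that reading and a simple two-source, fixed-effect pooling; the function names and numbers are hypothetical, and the design-adjusted models discussed in the webinar are more elaborate.

```python
def pooled_estimate(estimates, variances):
    """Inverse-variance weighted mean and its variance."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean = sum(w * y for w, y in zip(weights, estimates)) / total
    return mean, 1.0 / total


def downweighted_pool(rct, rwe, w):
    """Pool an RCT and an RWE estimate, down-weighting the RWE.

    rct, rwe: (estimate, variance) tuples
    w: confidence placed in the RWE, 0 < w <= 1 (w = 1 means full trust).
    The RWE variance is divided by w, so a smaller w gives the RWE less influence.
    """
    if not 0 < w <= 1:
        raise ValueError("w must be in (0, 1]")
    (y_rct, v_rct), (y_rwe, v_rwe) = rct, rwe
    return pooled_estimate([y_rct, y_rwe], [v_rct, v_rwe / w])


# Hypothetical treatment effects: (estimate, variance)
rct = (-0.40, 0.010)
rwe = (-0.20, 0.005)

# Sensitivity analysis over different levels of confidence in the RWE
for w in (1.0, 0.5, 0.2, 0.05):
    mean, var = downweighted_pool(rct, rwe, w)
    print(f"w = {w:.2f}: pooled effect {mean:.3f}, variance {var:.4f}")
```

As w shrinks, the pooled effect moves towards the RCT estimate; if the conclusions change materially across plausible values of w, that in itself is worth reporting.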

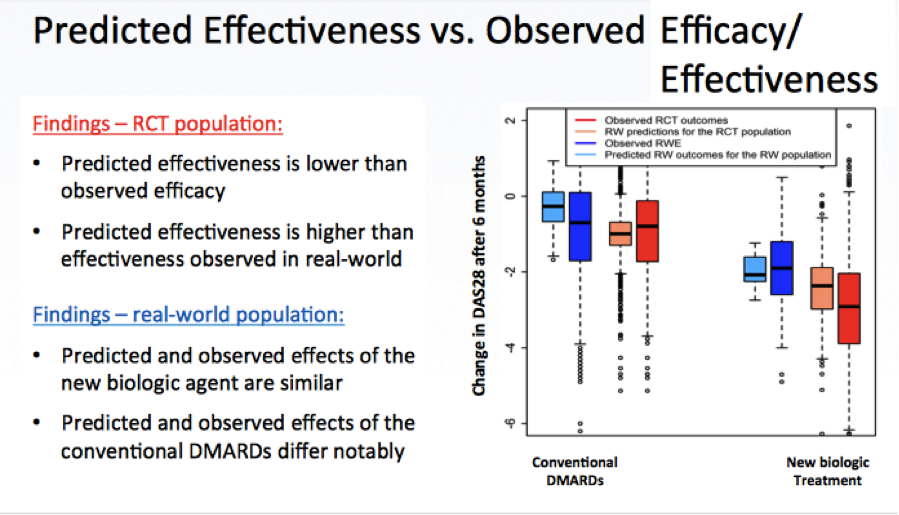

Following Salanti was Eva-Maria Didden, Postdoctoral research fellow at the ISPM, University of Berne, Switzerland, who presented a case study on rheumatoid arthritis. In her presentation she addressed the question of “How effective and safe is this drug compared to alternative therapies, in the patients who will likely receive it in the real world of a health care?”

The case study at hand asked the question of how to set up a mathematical model allowing for prediction of the real-world effect of a new biological treatment in patients with rheumatoid arthritis (RA) if:

- Only RCT data is available on the new treatment;

- No observational data on the new treatment is available;

- Observational data is available on an existing similar treatment.

The aim was to develop a mathematical model informed by observational evidence on treatment decisions, by RCT(s) on the efficacy of the new treatment, and by all significant effect modifiers and prognostic factors. From this, the real-world treatment effect for the RCT populations could be predicted, with treatment decisions predicted from RWE and treatment outcomes predicted from the available RCT evidence. An overview of the results was provided:

Didden discussed additional details and questions as well, before concluding her presentation by highlighting the main deliverable: a Bayesian inference framework that connects information from various data sources in a flexible way, so that RCT data and RWE can be combined. The framework also makes it possible to predict the real-world treatment effect and to assess and describe the efficacy-effectiveness gap. The main concerns she highlighted were predictive and external validity. Further work is in progress on including results from network meta-analyses to predict relative drug effectiveness, and on dynamic treatment regimes with time-varying confounders and censoring information.
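The role that effect modifiers play in the efficacy-effectiveness gap can be illustrated with a much simpler calculation than the Bayesian framework described above. The sketch below is not Didden's model: it assumes a single categorical effect modifier, subgroup effects estimated in an RCT, and subgroup shares observed in a real-world cohort, and it simply re-weights the subgroup effects to each population. All names and numbers are hypothetical.

```python
def standardised_effect(subgroup_effects, population_shares):
    """Average subgroup treatment effects over a population's subgroup mix.

    subgroup_effects: {subgroup: treatment effect estimated in the RCT}
    population_shares: {subgroup: share of the target population}
    """
    if set(subgroup_effects) != set(population_shares):
        raise ValueError("subgroups must match")
    total = sum(population_shares.values())  # normalise in case shares do not sum to 1
    return sum(subgroup_effects[g] * population_shares[g]
               for g in subgroup_effects) / total


# Hypothetical change in a disease activity score (negative = improvement)
subgroup_effects = {"low_baseline_activity": -0.8, "high_baseline_activity": -1.6}

# Hypothetical subgroup mix in the trial and in routine practice
rct_shares = {"low_baseline_activity": 0.3, "high_baseline_activity": 0.7}
real_world_shares = {"low_baseline_activity": 0.6, "high_baseline_activity": 0.4}

efficacy = standardised_effect(subgroup_effects, rct_shares)
effectiveness = standardised_effect(subgroup_effects, real_world_shares)
gap = effectiveness - efficacy

print(f"Effect in trial population:      {efficacy:.2f}")
print(f"Predicted real-world effect:     {effectiveness:.2f}")
print(f"Efficacy-effectiveness gap:      {gap:.2f}")
```

The calculation only holds if the subgroup effects carry over from the trial to the real-world setting, which is exactly the kind of external-validity assumption that needs checking.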

Bringing the presentations to a conclusion was Christine Fletcher, Executive Director Biostatistics, Amgen Ltd. “We are trying to promote that good scientific principles can be followed to achieve a high-quality analysis… Evidence synthesis needs to be very well thought through” for it to succeed, she noted. She summarised the conclusions of the day’s discussion as follows:

- Relative effectiveness can be estimated from RCTs

- Key assumptions are required and should be evaluated

- Follow good scientific principles to achieve a high quality analysis

- New evidence synthesis methods enable RWE to be integrated with RCT evidence to aid decision making at product launch

- Consider the relative contribution of each source of evidence

- Use sensitivity analyses assessing different levels of confidence

- (Relative) effectiveness can be predicted from RCT and RWE data using mathematical models, which allows the efficacy-effectiveness gap to be assessed

- Regard RCT and observational data as complementary sources of evidence

- Model validation is key to increasing the accuracy of predictions

Following the conclusion of the presentations, a Q&A allowed participants to ask further questions. A video recording, including coverage of the Q&A, and all presentations from the webinar can be viewed here.